Microsoft vs Google: where is the battle for the ultimate AI assistant taking us?

Tech editor Jonathan Bell reflects on Microsoft’s Copilot, Google’s Gemini, plus the state of the art in SEO, wayward algorithms, video generation and the never-ending quest for the definition of ‘good content’

This year, Microsoft turns 50, a significant waypoint in the valley of anguish that is Gen X middle age. In comparison, Generation Z Google (aged 26 and a half) is in the prime era of its shape-shifting, multifarious journey. Both companies are in a celebratory mood, with Microsoft issuing a clutch of microsites and retrospectives bathed in a golden glow, and Google consolidating its two-fisted grip on AI and AI-enabled hardware. As chance would have it, both Google and Microsoft have new, albeit virtual, product to talk about in the perennially fascinating realm of artificial intelligence. We sat down with key players from each company in an attempt to ascertain the status quo.

Paul Allen and Bill Gates founded Microsoft in 1975

Google’s latest innovation is an update to Gemini Live, a service offered to its coterie of Gemini Advanced subscribers, as well as those who own a Google Pixel 9 (or new Pixel 9a) or Samsung’s Galaxy S25. Essentially, Live allows you to talk to the smart assistant in natural, everyday language, requesting answers and information and having ‘her’ respond in real time. The latest update adds video as a means of conveying information, in addition to the text, image and voice commands it already understands.

Google's Pixel 9 Pro, a phone that wants you to use AI

This is a realisation of some of the capabilities demonstrated last year in Project Astra, Google’s ongoing research into the idea of a true AI assistant. Up at Google’s central London HQ, we’re shown a few demos, with a Pixel phone relaying info live to Gemini via its camera whilst Google’s rep asks questions. ‘What cocktail can I make with these mixers and spirits – something that’s not too sweet?’, or ‘What can I cook with the contents of my fridge, and what wine would go best with that?’

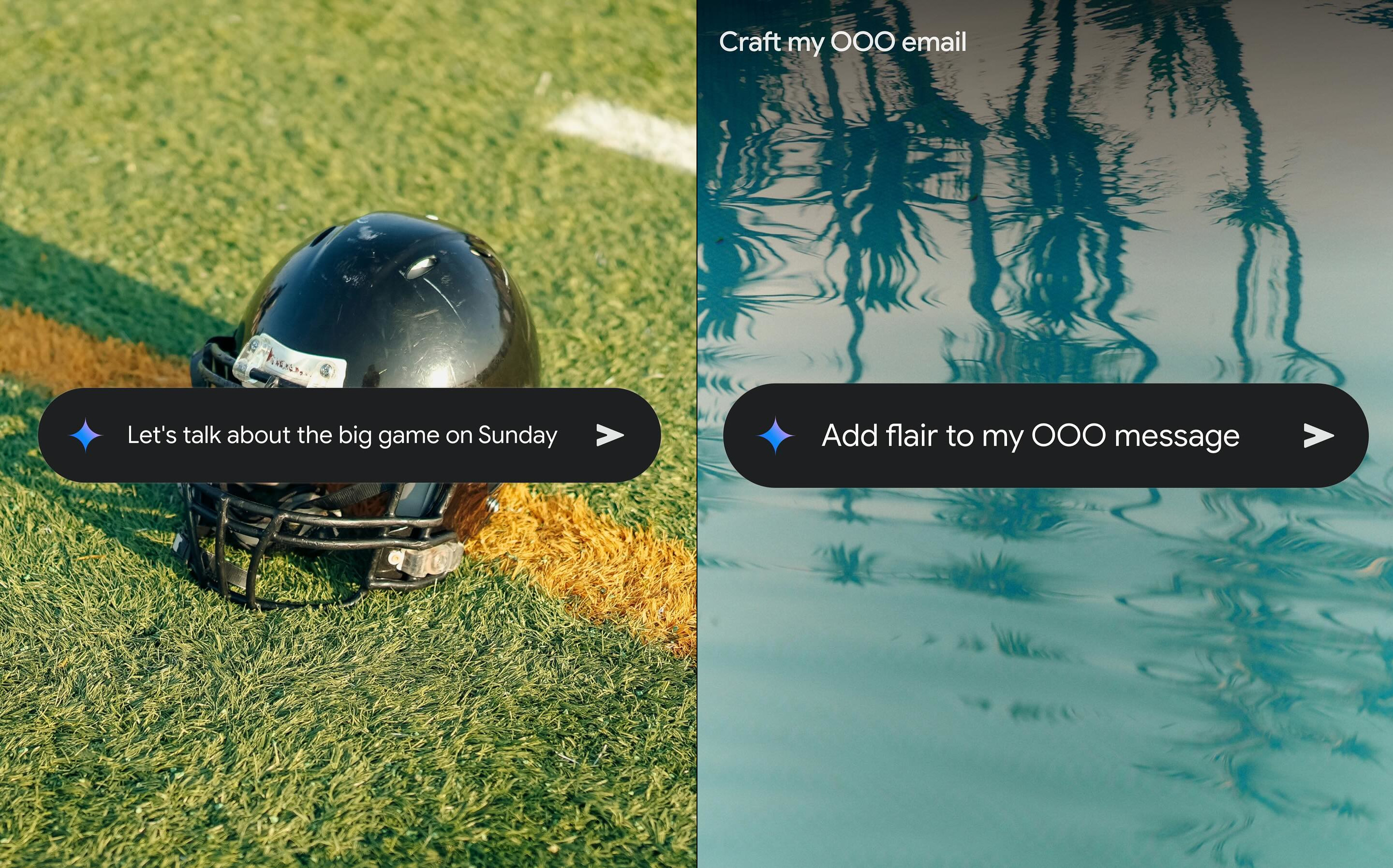

Gemini and its suggested use cases

It is impressive stuff, no question, although Gemini isn’t studying every single frame of the video – more like capturing a series of stills that it can then parse according to its large language model. There are still quite long, awkward silences between queries, as some distant server scrobbles around to assemble your answer. Is it a true assistant? In a sense, yes, although Google admits that the ability to act on certain commands across different apps (eg, ‘Gemini, book me a flight to Paris getting in tomorrow before 4pm’) still eludes it. ‘We can make tweaks,’ says Google’s spokesman, admitting that ‘it could be quicker. Gemini couldn’t be a tennis line judge, for example.’

For now, Gemini can only store the last seven minutes of visual memory, although text conversations and transcripts live practically forever in the cloud. It’s all part of a move towards making AI ‘remember’, inferring context and subject from previous conversations, things and places so that it can pick up where you left off. What Gemini can’t do now is surely on a product roadmap somewhere. As the dev team readily admits, a substantial aspect of AI research comes from seeing how users deploy the technology in the wild. The man from The Guardian wanted to know if Gemini could ‘watch’ a game of football and generate a live blog. The woman from the FT was concerned about the privacy implications of allowing an all-seeing eye into your home and processing the contents on a distant server. Would this data be used for training? Apparently not.

An AI image from Google's online documentation

AI: the Microsoft approach

A few days later, we sit down with Lucas Fitzpatrick, Creative Director and Design at Microsoft AI. In the battle of advanced digital assistants, it’s probably too close to call between Google’s Gemini and Microsoft’s Copilot. Both enjoy a penchant for whizzy demos, along with corresponding controversies, and yet neither AI tool has really entered mainstream usage. Microsoft has baked Copilot into its Office apps, while Gemini can now be found in Google Suite. Do you know anyone who uses them?

Microsoft is definitely being a bit edgier with the related recent introduction of Recall, a controversial function that captures regular screenshots of your PC to create a massive database that serves as a visual and mental back-up of what you’ve been up to. It’s meant to help search. Needless to say, privacy campaigners are not impressed.

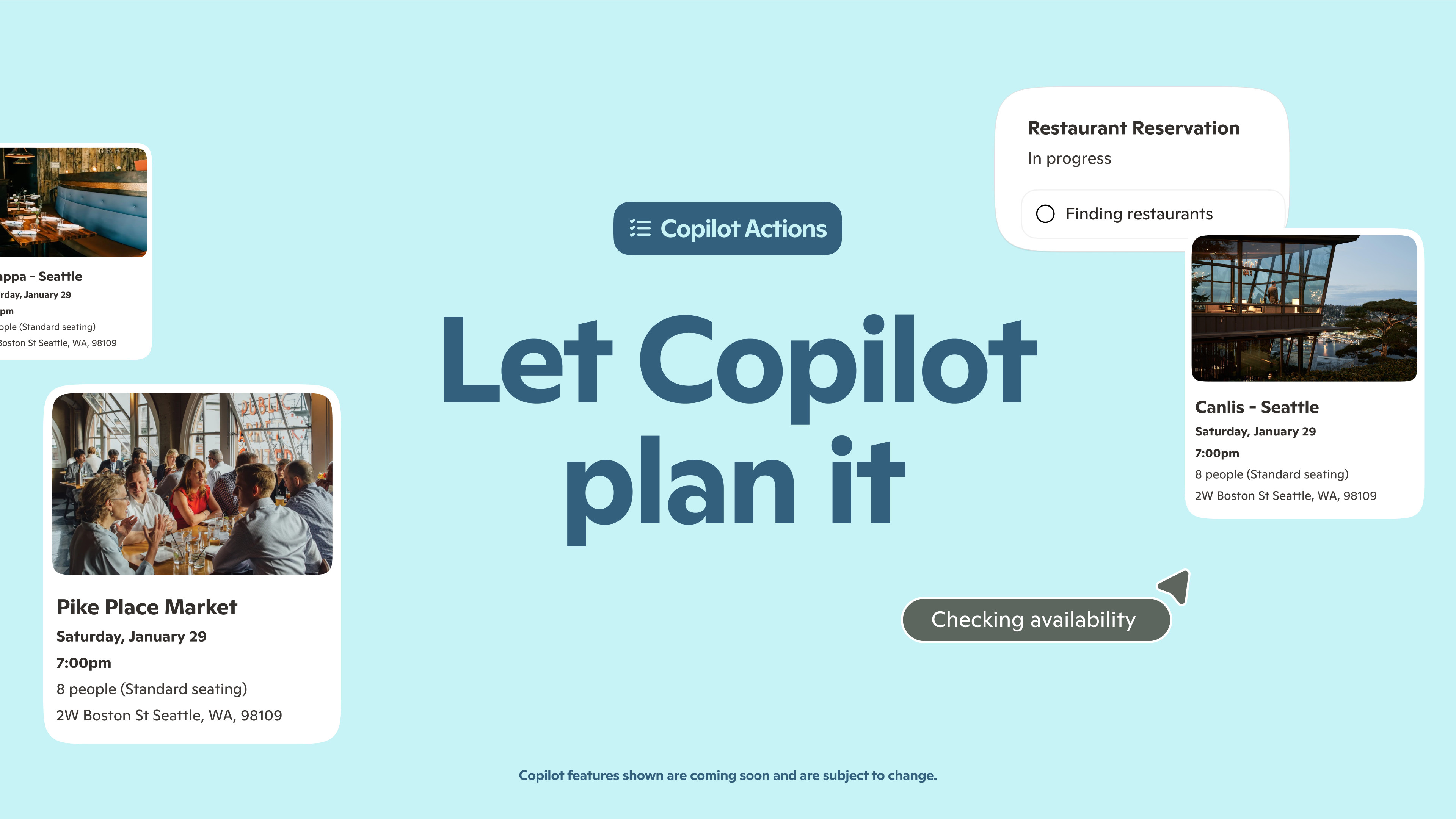

Copilot: 'your AI companion'

For a designer like Fitzpatrick, our use of AI poses many intriguing problems. ‘What does it mean for interaction and design?’ he asks rhetorically. ‘As designers, it’s such an interesting time.’ To make it clear, we’re not discussing the role of AI as a creative tool here; this is about how users interact with devices in ways that frequently go beyond pushing pixels around a screen. ‘We can now create sentences that evoke feelings,’ Fitzpatrick says. ‘It’s such a big shift.’

The end goal – one that is increasing in complexity every day – is for Copilot to be a true digital companion, an AI-driven assistant that provides context-related help, aids accessibility, simplifies everyday computer use and – crucially – builds something akin to an emotional relationship with the user. ‘We’re moving from an era of search into an era of normal conversation,’ Fitzpatrick says. ‘As designers, we’re looking at what that journey could be like.’

In a similar vein to Gemini Live, there’s Copilot Vision for sharing and decoding video, whilst other AI toolsets and features criss-cross the aisle between the various tech giants, making it hard to say who exactly thought of something first (both Google and Microsoft can conjure up AI-generated podcasts, for example, giving you ‘an easy, engaging and different way to consume information with minimal effort’, according to Microsoft).

Microsoft at 50

An early Microsoft logo

On a very simple level, Fitzpatrick and his team started with the visual branding of Copilot, two intertwined rainbow-coloured ribbons. ‘The colours speak to endless possibilities, whereas the form is the symbolism of the handshake,’ says Fitzpatrick. ‘How can we signal the future is friendly?’ For a designer, the move to voice-based computing brings both challenges and rewards (Fitzpatrick has enjoyed working with voice talents to help shape the Copilot ‘voice’). ‘We’re not going to end the visual experience. But we might spend less time looking at screens – which I think is a wonderful thing,’ he says. Nevertheless, typography, animation, colour palettes, icons and navigation all have to be considered.

Copilot Appearances offers customisable AI avatars

There’s more. ‘AI is one system created for millions of people,’ Fitzpatrick continues, ‘but what if it had a visual form that was created for you, by you? That’s very exciting.’ He’s talking about Copilot Appearances, a tech demo shown earlier this month wherein the user can create an avatar for ‘their’ AI. ‘We’re thinking about this as a human-centred personal experience,’ he says, ‘bringing a warmth, an accessibility and a non-tech feeling to what it means to interact with AI. AI can feel cold and sterile. The underlying technology will move forwards, but [for a user] the difference is whether it [feels] aligned to them and their goals. Our team is really deeply invested in these questions.’

AI imagery from Microsoft's Copilot documentation

Would you like some help with that?

It's a brave new world of digital assistance, although let’s not forget that Microsoft has been ploughing this furrow for decades – the company even went as far as summoning Clippy, its dreaded Office help assistant from the late 1990s, in its Copilot Appearances demos. However you choose to have your avatar appear, the aim is to increase its connection to you and you alone. As Mustafa Suleyman, Executive Vice President and CEO of Microsoft AI, put it, ‘with your permission, Copilot will now remember what you talk about, so it learns your likes and dislikes and details about your life: the name of your dog, that tricky project at work, what keeps you motivated to stick to your new workout routine’.

Microsoft Copilot presentation

So is everything rosy in the world of AI? Skip the rampant energy consumption, privacy concerns, sketchy copyright issues and little understood but very real problems like hallucinations, and it feels as if AI is being rendered down into two distinct paths, assistance and creation. Here we run up against a bit of a culture clash. Google split its demo session between ‘traditional’ media (including yours truly) and a clutch of ‘content creators’. In a very real sense, it feels as if we're a part of the real-time vivisection of the media industry, with the former group committed to reporting and the latter to creating. There are no prizes for guessing which one Google appears to be betting on.

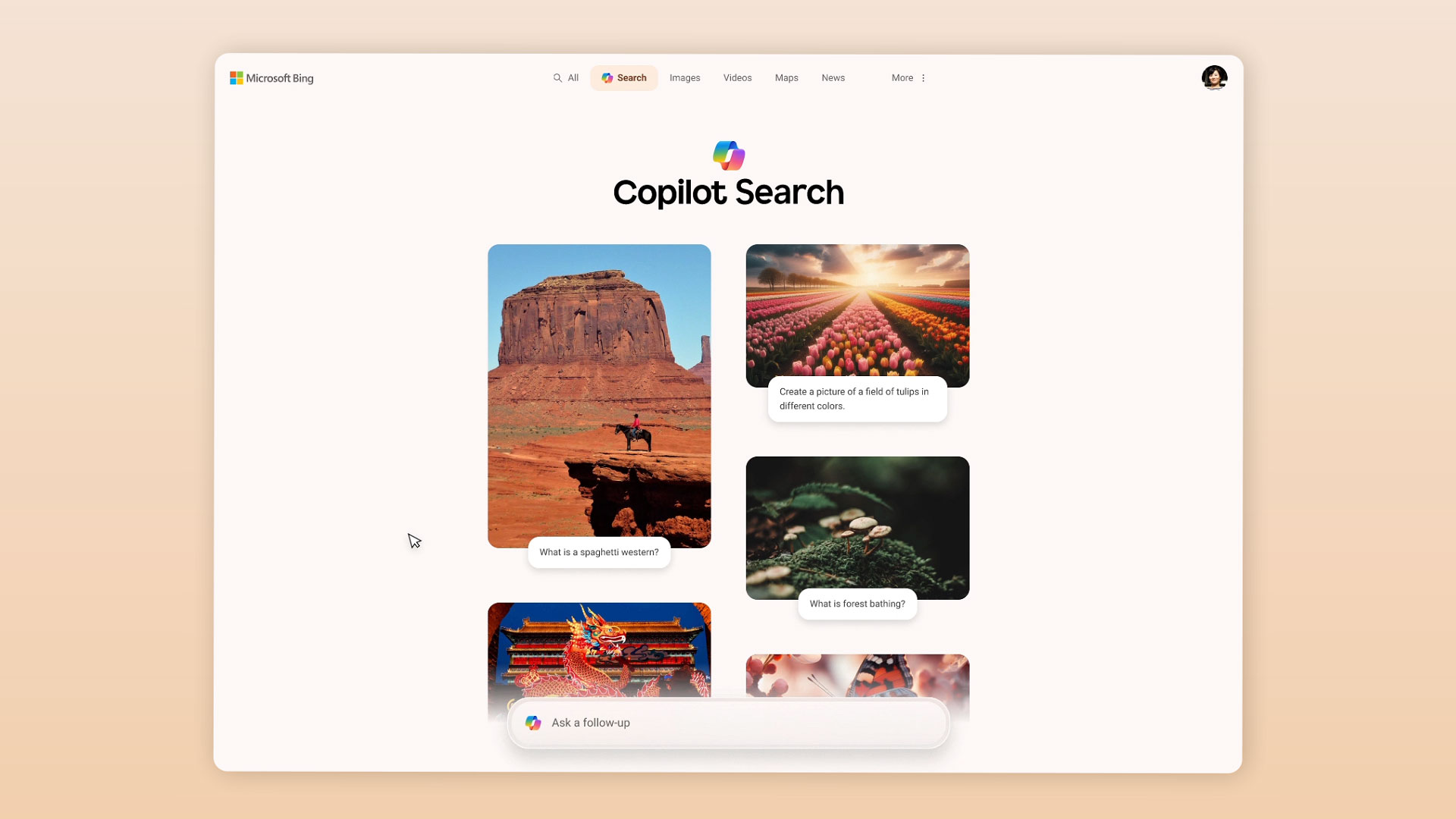

Copilot-driven search is already here

Google itself has admitted that the latest update to its ‘algorithm’, the feared and dreaded backroom machinations that drive its vast search empire, is intended to ‘continue our work to surface more content from creators through a series of improvements throughout this year’. It’s part of an ongoing game of global whack-a-mole, whereby Google updates its algorithm and millions of websites change their practices in order to stay within its (sometimes surprisingly myopic) sights. Unsurprisingly, people do unscrupulous things for attention and have done since the earliest days of the web, and part of the 'algo update' is to ferret these out.

There are other unwanted diversions. Woven in amongst the myriad, ever-changing SEO strategies is a firehose of AI-generated slop, an infinite layer of slurry that’s in danger of coating everything with misdirection, misinformation and general misery for those in the business of ‘creating content’ the old-fashioned way. Although the system is designed to weed out the slop before it gets into your feed, it doesn’t always work, much like the British water companies and their attitude to sewage in rivers.

What is ‘good content’?

That takes us to the crux of the matter: what Google defines as 'great content' isn’t entirely clear. AI or no AI, there’s a nagging suspicion that the company doesn’t really rate or rank traditional journalism. Click around this website and you’ll find thousands and thousands of stories, lovingly researched and written over two decades by nearly 500 writers. With a flick of a switch in some distant data centre, the global ability to discover this – and hence its appetite for it – can be dialled up or down. Fluctuating, unpredictable traffic is not good news for a website, especially when those fluctuations have no obvious correlation with the quality and quantity of the output. It becomes a sort of death spiral, with sites that fall out of the spotlight in danger of falling by the wayside, withering and dying when their content is unwittingly overlooked.

What exactly is 'good content'? It would be the work of a moment to ask an AI to conjure up some fantastical imagery for this piece, but it still feels like a form of cheating and certainly nothing that could be described as 'good'. Instead, we’ve chosen imagery from Microsoft and Google, some of which will inevitably have been machine generated. However, just as there should be more to journalism than re-sizing jpgs and pondering likely search terms, ‘creativity’ shaped by prompts still feels awfully lacking. It still takes a lot of human input to make machine-generated output feel in any way clever. Let's hope it's always that way. Compare the video for Pulp’s comeback single, 'Spike Island', which cleverly plays on AI’s default uncanny awkwardness (so Jarvis). Contrast it with this trailer for 'Giraffes on Horseback Salad', an AI-driven interpretation using Google’s new Veo 2 generative video model of Salvador Dalí’s unrealised 1937 film script. It should be a good match, no? No.

As of this week, you can ask Veo 2 to create eight-second snippets of an imaginary action movie, pseudo-Pixar animation or psychedelic imagery. There’s also Whisk Animate, one of Google’s many open experiments, which adds the ability to tune your ‘film’ with imagery as well as text. Of course, this is invariably quite entertaining. However, the knowledge that somewhere, your trivial prompts are consuming vast amounts of energy to create something so fundamentally without value quickly takes the edge off the fun.

Read all about it: Google Gemini

Google is (unsurprisingly) cagey about how much energy all this consumes, although it admits that dealing with video is much more data- and processing-intensive than working with stills. When we asked Gemini what Google’s goals were for AI, it (she?) replied that the company wanted ‘to develop artificial intelligence that fundamentally enhances humanity's ability to access, understand, and utilise information, leading to breakthroughs that solve major world problems and create profoundly helpful tools and experiences for everyone, developed and deployed responsibly’.

Noble words, even if they were spoken by a machine trained on millions of corporate PowerPoint decks. When you next ask yourself what AI can do for you, consider the contradictions of an industry that pushes brave new approaches to content creation with one hand and blanks traditional creators with the other. Google and Microsoft both want their AI to be all things to all people, seemingly not happy until their customer base is fractured to the point that everyone has become some form of creator. Wherever you hope or fear AI goes next, be wary of accepting its unsolicited offers of help.

Copilot.Microsoft.com, MicrosoftCopilot

Jonathan Bell has written for Wallpaper* magazine since 1999, covering everything from architecture and transport design to books, tech and graphic design. He is now the magazine’s Transport and Technology Editor. Jonathan has written and edited 15 books, including Concept Car Design, 21st Century House, and The New Modern House. He is also the host of Wallpaper’s first podcast.
